I ran into an unknown GUID while using Shellbags Explorer (SBE) recently. Here's what I did to confirm what it was -- with some testing and new findings along the way. All of this was done on Windows 10. Thanks to Eric Zimmerman and Joachim Metz for their quick responses and helpful details.

An unmapped GUID in SBE.

Scenario

You have an unknown GUID. You have an idea of what it is, but want to confirm.

The Solution

I had an inkling that this GUID was related to Adobe Creative Cloud. Usually, you'll be able to see some folders under the unknown GUID within SBE that are specific enough to pinpoint the application responsible -- doubly so if you're analyzing your own machine and can recognize the folders. With Creative Cloud in mind, let's confirm.

First, boot up a test virtual machine and install the Adobe Creative Cloud desktop application [4]. The free Microsoft VMs for IE/Edge testing [3] are useful for this.

Installing and updating the Adobe Creative Cloud desktop application.

After installing the application and signing in, we can see that a new library called "Creative Cloud Files" shows up in the Windows Explorer sidebar (by default, the folder itself is created in the root of the user's profile).

Observing new "Creative Cloud Files" library added to Explorer sidebar.

Before interacting with this folder, let's take a baseline of what our UsrClass.dat looks like in SBE as a sanity check. At this point, there should be no shellbags entries for this location.

Recursive view of shellbags entries within SBE. No "Creative Cloud Files" entries.

As expected, there are no entries for the "Creative Cloud Files" location. But before we go any further, let's grab the directory's $STANDARD_INFORMATION (SI) timestamps. These will be important later.

FTK Imager Lite showing MACE times for the "Creative Cloud Files" folder.

Now that we've noted those timestamps, let's interact with the folder.

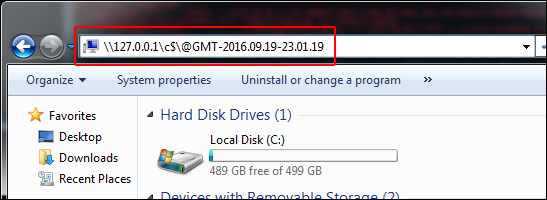

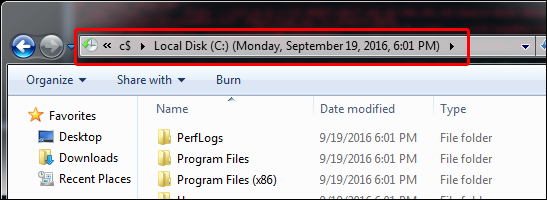

Interacting with the "Creative Cloud Files" folder via Windows Explorer.

There should now be a shellbags entry for this folder in the user's UsrClass.dat. Pull that, along with its .LOG files (if necessary), and open it up with SBE.

Observing the shellbags entry for the "Creative Cloud Files" folder with SBE.

Looks like we got what we came for...but we're not done just yet.

After running this same test on another Windows 10 machine, I noticed something strange: the last section of the GUID wasn't the same.

0e270daa-1be6-48f2-ac49-6117167f7994

Typically, these GUIDs will stay consistent from system to system, since most of the ones you'll come across during shellbags analysis are built-in Known Folder GUIDs. But it turns out that software vendors can extend this set of known folders by registering their own [6] [7].

While the final section of the GUID seems to differ from machine to machine, the first four sections (0e270daa-1be6-48f2-ac49) appear to have remained consistent since at least 2015 (a quick web search for the prefix will show this).
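Because only a prefix is stable, it can help to know what that prefix looks like as raw bytes, for example when searching a hive in a hex editor. GUIDs are stored on disk in mixed-endian form (the first three groups are little-endian, the last two are stored as-is), which is why `0e270daa` appears as `aa 0d 27 0e` in the hex dump of the entry later in this post. The Python sketch below converts a prefix into that byte pattern and finds every occurrence in a buffer. The function names are my own, and it assumes the prefix ends on a group boundary.

```python
import uuid

# Byte length of each of the five hyphen-separated GUID groups.
GROUP_SIZES = (4, 2, 2, 2, 6)


def guid_prefix_to_bytes(prefix: str) -> bytes:
    """Convert a GUID prefix made of whole groups into its on-disk byte pattern.

    Windows stores GUIDs mixed-endian: the first three groups are
    little-endian and the last two are stored as-is (uuid's bytes_le).
    """
    groups = prefix.strip("{}-").split("-")
    if not 1 <= len(groups) <= len(GROUP_SIZES):
        raise ValueError(f"not a GUID prefix: {prefix!r}")
    for group, size in zip(groups, GROUP_SIZES):
        if len(group) != 2 * size:
            raise ValueError(f"group {group!r} should have {2 * size} hex digits")
    # Pad the unknown trailing groups with zeros so uuid can parse the value...
    padded = groups + ["00" * size for size in GROUP_SIZES[len(groups):]]
    raw = uuid.UUID("-".join(padded)).bytes_le
    # ...then keep only the bytes that belong to the known groups.
    return raw[: sum(GROUP_SIZES[: len(groups)])]


def find_all(data: bytes, pattern: bytes) -> list:
    """Return every offset at which pattern occurs in data (overlaps allowed)."""
    offsets, start = [], 0
    while (hit := data.find(pattern, start)) != -1:
        offsets.append(hit)
        start = hit + 1
    return offsets


pattern = guid_prefix_to_bytes("0e270daa-1be6-48f2-ac49")
print(pattern.hex(" "))  # aa 0d 27 0e e6 1b f2 48 ac 49

# First 16 bytes of the "Creative Cloud Files" shellbags entry shown in this post.
entry_head = bytes.fromhex("3a 00 1f 42 aa 0d 27 0e e6 1b f2 48 ac 49 61 17")
print(find_all(entry_head, pattern))  # [4]
```

The same `find_all` call works on the bytes of an exported UsrClass.dat (read with `open(path, "rb").read()`), but a hit is only a candidate location, not a parsed shellbags entry.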

With that out of the way, let's dig deeper into the actual makeup of the unmapped GUID entry, out of curiosity. I happened to notice that the unmapped GUID entry looked very similar to the existing "Dropbox" entry on another test machine (for which the GUID was mapped). As I compared the two, I noticed that both contained three Windows FILETIME timestamps. I couldn't find any explanation for these, so I referred to Eric Zimmerman's "Plumbing the Depths: ShellBags" presentation [8] and Joachim Metz's Windows Shell Item format specification [9].

Using SBE's data interpreter to identify three FILETIME timestamps.

Eric's and Joachim's documentation was invaluable for walking through the hex; both serve as excellent foundations for digging into these file formats. Still, I wasn't able to determine why I was seeing three timestamps instead of the two shown in the "Details" tab. Nor could I find the 0xbeef0026 extension block in the specification, or any reference to it for entries whose class type indicator is 0x1F (a root folder shell item).

With this information in hand, it was time to start piecing it all together.

If we compare the animation above with the timestamps of the "Creative Cloud Files" folder that we pulled with FTK Imager earlier, we can see that the three FILETIME timestamps in the shellbags entry exactly match the folder's SI creation, last modified, and last accessed times at the time we interacted with it.
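For reference, a Windows FILETIME is a 64-bit little-endian count of 100-nanosecond intervals since 1601-01-01 UTC. A minimal Python sketch for decoding the three values from the entry (the byte strings are copied from offsets 0x20, 0x28, and 0x30 of the hex dump below) might look like this. Python's `datetime` only has microsecond resolution, so the final 100 ns digit is dropped, and the results are in UTC rather than any local time zone a GUI tool may display.

```python
import struct
from datetime import datetime, timedelta, timezone

# FILETIME counts 100-nanosecond ticks since 1601-01-01 00:00:00 UTC.
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def filetime_to_datetime(raw: bytes) -> datetime:
    """Decode an 8-byte little-endian Windows FILETIME into an aware UTC datetime."""
    (ticks,) = struct.unpack("<Q", raw)
    # datetime has microsecond resolution, so the last 100 ns digit is dropped.
    return FILETIME_EPOCH + timedelta(microseconds=ticks // 10)


# The three 8-byte values at offsets 0x20, 0x28 and 0x30 of the entry.
raw_stamps = [
    "5e bf 26 f7 bf 1f d4 01",
    "3e 9b 02 f8 bf 1f d4 01",
    "39 10 8c fb bf 1f d4 01",
]
decoded = [filetime_to_datetime(bytes.fromhex(s)) for s in raw_stamps]
for stamp in decoded:
    print(stamp.isoformat())
# 2018-07-20T00:24:04.631331+00:00
# 2018-07-20T00:24:06.072198+00:00
# 2018-07-20T00:24:12.006200+00:00
```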

By creating a color-coded template of this entry, we can come close to attributing every byte of it to a field, including the timestamps:

Hex of unmapped "Creative Cloud Files" shellbags entry

```
0000 0000: 3a 00 1f 42 aa 0d 27 0e
0000 0008: e6 1b f2 48 ac 49 61 17
0000 0010: 16 7f 79 94 26 00 01 00
0000 0018: 26 00 ef be 11 00 00 00
0000 0020: 5e bf 26 f7 bf 1f d4 01
0000 0028: 3e 9b 02 f8 bf 1f d4 01
0000 0030: 39 10 8c fb bf 1f d4 01
0000 0038: 14 00 00 00
```


Offset 0x00: Shell Item Size (0x3A = 58d)

Offset 0x02: Class Type Indicator (0x1F - Root Folder Shell Item)

Offset 0x03: Sort Index (0x42 - Libraries)

Offset 0x04: GUID (0e270daa-1be6-48f2-ac49-6117167f7994)

Offset 0x14: Extension Block Size (38d)

Offset 0x16: Extension Version (?)

Offset 0x18: Extension Block Signature (0xbeef0026)

Offset 0x1C: ????

Offset 0x20: Windows FILETIME 1 (Creation)

Offset 0x28: Windows FILETIME 2 (Last Modified)

Offset 0x30: Windows FILETIME 3 (Last Accessed)

Offset 0x38: ????
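The template above can be turned into a small parser for this one entry type. The Python sketch below assumes the field layout exactly as annotated, including the tentative labels and the unknown fields at 0x1C and 0x38. It reads 0x38 as a 2-byte value because the 58-byte item ends at 0x3A. It checks only the class type and the extension signature, so it is not a general shell item parser; SBE and libfwsi handle far more cases.

```python
import struct
import uuid
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


@dataclass
class RootFolderEntry:
    size: int            # 0x00
    class_type: int      # 0x02
    sort_index: int      # 0x03
    guid: str            # 0x04
    ext_size: int        # 0x14
    ext_version: int     # 0x16 (meaning unconfirmed)
    ext_signature: int   # 0x18
    unknown_1c: int      # 0x1C (unknown)
    created: datetime    # 0x20
    modified: datetime   # 0x28
    accessed: datetime   # 0x30
    unknown_38: int      # 0x38 (unknown; 2 bytes, since the item ends at 0x3A)


def _filetime(ticks: int) -> datetime:
    return FILETIME_EPOCH + timedelta(microseconds=ticks // 10)


def parse_root_folder_entry(data: bytes) -> RootFolderEntry:
    """Parse a 0x1F root folder item carrying a 0xbeef0026 block, per the template above."""
    size, class_type, sort_index = struct.unpack_from("<HBB", data, 0x00)
    if class_type != 0x1F:
        raise ValueError(f"expected class type 0x1F, got {class_type:#04x}")
    guid = str(uuid.UUID(bytes_le=data[0x04:0x14]))  # mixed-endian on disk
    ext_size, ext_version, ext_sig, unknown_1c = struct.unpack_from("<HHII", data, 0x14)
    if ext_sig != 0xBEEF0026:
        raise ValueError(f"unexpected extension signature {ext_sig:#010x}")
    created, modified, accessed = struct.unpack_from("<3Q", data, 0x20)
    (unknown_38,) = struct.unpack_from("<H", data, 0x38)
    return RootFolderEntry(
        size, class_type, sort_index, guid,
        ext_size, ext_version, ext_sig, unknown_1c,
        _filetime(created), _filetime(modified), _filetime(accessed),
        unknown_38,
    )


ENTRY = bytes.fromhex(
    "3a 00 1f 42 aa 0d 27 0e e6 1b f2 48 ac 49 61 17"
    " 16 7f 79 94 26 00 01 00 26 00 ef be 11 00 00 00"
    " 5e bf 26 f7 bf 1f d4 01 3e 9b 02 f8 bf 1f d4 01"
    " 39 10 8c fb bf 1f d4 01 14 00 00 00"
)

item = parse_root_folder_entry(ENTRY)
print(item.size, item.guid, hex(item.ext_signature))
# 58 0e270daa-1be6-48f2-ac49-6117167f7994 0xbeef0026
```

Running it against this entry shows two things that fit together: the extension block starts at 0x14 and is 38 bytes long, which ends exactly at the 58-byte item size, and the unknown value at 0x38 is 0x0014, the same offset at which the extension block starts. Whether that field is actually a pointer back to the start of the block is an assumption, not something confirmed here.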

The bottom line is that, by performing behavioral analysis and walking through the file format at the hex level, we were not only able to find what the unknown GUID maps back to, but also to (a) identify three SI FILETIMEs (creation, last modified, and last accessed) stored in an undocumented extension block (0xbeef0026) and (b) determine that GUIDs generated or registered by software vendors are not always consistent across systems (in this example, only the last section varies).

The larger question is: are the inconsistent GUIDs we see specific to Creative Cloud, or are there more instances of this inconsistency? Every "Creative Cloud Files" GUID I have seen -- from three different machines tested -- shows a different final section. Dropbox seems to have consistent GUIDs across machines. Could Creative Cloud be generating the final section of the "Creative Cloud Files" GUID based on something that varies between systems, like the username?

And just like that, we have another hypothesis that we can test. Using the same VM from before, I created a new user and gave it the same name as a user on one of my other test machines. After I signed in with the Creative Cloud desktop application, a new "Creative Cloud Files" folder was created for the new user account. Following the same steps as above, I generated a shellbags entry for it and found that the "Creative Cloud Files" GUIDs (including the last section) on the two machines, which shared the same username, were identical!

If you create a user named "IEUser" on your machine and run through this whole process, you'll find that the GUID for "Creative Cloud Files" is 0e270daa-1be6-48f2-ac49-6117167f7994. Likewise, for the "4n6k" user, you'll find the GUID to be 0e270daa-1be6-48f2-ac49-fc258b405d45. The username is also treated case-insensitively, so user "4N6K" results in the same GUID.
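To confirm which parts of two observed GUIDs differ, a short comparison over the five hyphen-separated groups is enough. With the IEUser and 4n6k values above, only the fifth group differs. The helper name below is hypothetical, and `uuid.UUID` is used only to normalize case and braces before comparing.

```python
from __future__ import annotations

import uuid


def differing_groups(a: str, b: str) -> list[int]:
    """Return the 1-based positions of the GUID groups that differ between a and b."""
    # uuid.UUID normalizes case and braces before we split into groups.
    groups_a = str(uuid.UUID(a)).split("-")
    groups_b = str(uuid.UUID(b)).split("-")
    return [i for i, (x, y) in enumerate(zip(groups_a, groups_b), start=1) if x != y]


ieuser = "0e270daa-1be6-48f2-ac49-6117167f7994"
user_4n6k = "0e270daa-1be6-48f2-ac49-fc258b405d45"
print(differing_groups(ieuser, user_4n6k))  # [5]
```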

Given this, it may be necessary to match the "Creative Cloud Files" location (and any other application GUIDs that exhibit the same behavior) on the first four sections of the GUID (0e270daa-1be6-48f2-ac49-) rather than on the whole value.
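A minimal Python sketch of that matching strategy: exact GUID matches are tried first, then the longest registered prefix wins. The lookup tables and function name are illustrative assumptions, not SBE's actual implementation or GUID map format. The My Computer entry is just a familiar example of an exact match.

```python
from __future__ import annotations

import uuid

# Illustrative lookup tables (hypothetical; not SBE's actual GUID map format).
# Keys are expected in lower case.
EXACT = {
    "20d04fe0-3aea-1069-a2d8-08002b30309d": "My Computer",
}
PREFIXES = {
    # Stable part of the "Creative Cloud Files" GUID observed in this post.
    "0e270daa-1be6-48f2-ac49-": "Creative Cloud Files",
}


def resolve_guid(guid: str, exact: dict[str, str], prefixes: dict[str, str]) -> str | None:
    """Map a GUID to a name: exact match first, then the longest matching prefix."""
    normalized = str(uuid.UUID(guid))  # lower-case, no braces
    if normalized in exact:
        return exact[normalized]
    for prefix in sorted(prefixes, key=len, reverse=True):
        if normalized.startswith(prefix.lower()):
            return prefixes[prefix]
    return None


print(resolve_guid("{0E270DAA-1BE6-48F2-AC49-FC258B405D45}", EXACT, PREFIXES))
# Creative Cloud Files
```

Trying exact matches first leaves well-known, fully consistent GUIDs unaffected, and preferring the longest prefix keeps a short, generic prefix from shadowing a more specific one.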

-4n6k

References

1) https://docs.microsoft.com/en-us/windows/desktop/shell/known-folders

2) https://ericzimmerman.github.io/

3) https://developer.microsoft.com/en-us/microsoft-edge/tools/vms/

4) https://www.adobe.com/creativecloud/desktop-app.html

5) https://docs.microsoft.com/en-us/windows/desktop/shell/knownfolderid

6) https://docs.microsoft.com/en-us/windows/desktop/shell/how-to-extend-known-folders-with-custom-folders

7) https://www.winhelponline.com/blog/add-custom-folder-this-pc-navigation-pane-windows/

8) https://www.sans.org/summit-archives/file/summit-archive-1492184337.pdf

9) https://github.com/libyal/libfwsi/blob/master/documentation/Windows%20Shell%20Item%20format.asciidoc